Touché to TOUCH?!

We have evolved the sense of Touch to Know, to glean information about an object. In this context, a physical object is its own user interface. It doesn't require a capacitive or inductive touch screen to probe it and get a pixelated answer on the screen!

Why?

By touching an object we learn about its state. We obtain feedback on whether it is hot/cold, rough/smooth, dangerous/safe, clean/dirty, ripe/unripe, etc.

Sometimes we touch objects with a purpose.

- to brush away dirt

- to make indents

- to scratch or scour some wanted or unwanted material off it

Touch as a Mode of Interaction

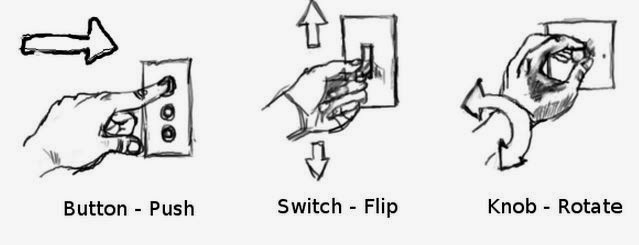

Physical manipulation -- pushing a button, flicking a toggle, pulling a T-handle, turning a knob/wheel, etc. -- was the norm for user interaction in the industrial age. One literally had to overcome the force of the mechanism while interacting with it (which, by the way, also provided valuable haptic and kinesthetic feedback, although it was fatiguing from a muscular-effort standpoint). Thus these were referred to as Machine Cowboy interfaces.

Next came the Analog Professional, where physical effort was made easy by hydraulics, solenoids and actuators (e.g., power steering). User-interface technology and interaction grammar continued to evolve over time. Now we are in the touch input epoch, which has been extended to things such as Fly-by-Wire and Drive-by-Wire, where an input on, say, a touch screen or joystick is converted into a digital signal, which in turn changes the speed of the HVAC fan in a car or the flaps on the wing of a plane.

But when and where did Touch interaction first appear? You would be surprised to learn that the earliest touch interaction was more on the physical continuum and didn't involve an LCD screen: the degree of pressure exerted on the interface was the input!

The world's first touch interface was the Electronic Sackbut. (Follow this link for an illustrated history of Touch Input Technologies).

Figure: 1948, the Electronic Sackbut. The right hand controls the volume by applying more or less pressure on the keys; the left hand controls four different sound texture options via the control board placed over the keyboard. (Courtesy: NPR)

Now let us compare the Electronic Sackbut's user interface with a ubiquitous piece of technology of our time, the iPhone.

The iPhone with its multi-touch user interface (e.g., pinch, rotate, swipe, etc.) is a marvel. But there is one big difference between the Electronic Sackbut and the iPhone. The gateways to touch interaction on the iPhone, the "icons," are filled with semiotic information: symbols, signs and text.

Figure: iPhone's Touch Interface

Thus one needs to perceive and interpret the semiotic information, visually and cognitively, before deciding to do something with it. The iPhone is certainly not a problem when visual and cognitive attention are not fragmented, but that is not the case when one is multitasking (e.g., driving while using the phone). And there are many tasks besides driving that involve multitasking. For example, a public safety professional such as a police officer needs to be vigilant about his environment; that is, he cannot afford to be visually tunneled, with his eyes riveted on the screen of his radio communication device, compromising his own safety in the process.

The challenges of user interaction during multitasking apply not only to a touch screen but also to a UI bedecked with an array of physical push-buttons that have similar characteristics.

Another noteworthy point is that the icons on the iPhone all have the same touch and feel. They don't distinguish themselves from each other on the tactile / haptic / pressure dimension. They all feel the same, even with a haptic vibe, and thus provide the same affordances.

An affordance is a property of an object, or an environment, which allows an individual to perform an action. For example, a knob affords twisting, and perhaps pushing, while a cord affords pulling. (via Wiki)

Figure: Varieties of Physical Affordances. Some affordances may be contextually goal-driven: e.g., using a hammer as a paperweight.

The concept of affordance has also been extended to encompass virtual objects, although some experts disagree with this extension because virtual objects lack physical feedback. For example, touching a touch-sensitive icon "affords" an action: a feature or app is opened. Or, in a mouse point-and-click paradigm, icons, radio buttons and tabs are affordances (Figure below).

Figure: Virtual Affordances on a Graphical User-Interface (GUI)

Touching to KNOW vs. Touching to say NO

I began this article by explaining the importance of touching to "know," a naturally evolved human ability that makes interacting with objects in the world intuitive (second nature). Now, contrast this with touching to say No (Figure below).

A purely semiotic interface with like-affordances is not limited to touch screens. It may also include an array of buttons (same "push" affordance). Although physically pushing a button in an array of similar buttons has a tactile / kinesthetic dimension to it, one still needs to cognitively process the icon or label on the button. So in some ways, such buttons are similar to icons arranged in an array on a touch screen, all with the same physical affordances. This can indeed pose a problem in multitasking environments such as driving, where one may have to look at the buttons, perceive the semiotic information, select the appropriate one and then push it.

Figure: The array of similar push buttons with the same affordances (except for 3 knobs) on this multi-band mobile radio used inside a police car provides a physical dimension to the interaction, which is good. But from a semiotic point of view, the buttons are similar to a touch screen and may impose similar visual and cognitive workloads in a driving / multitasking context. (Image via Motorola Solutions)

Automotive Industry Going-ons with Touch Input

A recent headline was an eye grabber for designers in the automotive and technology worlds:

Figure: The Center Stack of a Ford Vehicle. Regardless of whether the control is virtual or a physical button, there is a heavy reliance on semiotic information, including very similar affordances ("push"). (Image and full article at: Extreme Tech)

Ford's move towards replacing virtual touch buttons with physical buttons may yield some performance improvement, but it may not be a significant one, for the reasons discussed above: similar affordances and semiotic dependence.

Besides a heavy reliance on semiotic information, there has also been a push towards a reliance on inferential reasoning and towards control-to-display relationships that are separated across different planes and surfaces, both of which result in additional cognitive load. The Cadillac CUE Infotainment System (video) is one such example. It illustrates the amount of learning and inferential reasoning required to interact with it.

Cadillac CUE Infotainment System

But there is some good news. There have been some novel ideas about touch screen design for cars. See video below.

The Future: Mixed Modal Interactions

Our naturally evolved ways of interacting with other humans, animals, objects and artefacts in the world involve touch, speech, gestures and bodily-vocal demonstrations (including facial expressions), among other things. Could a human-machine interface, particularly in a critical piece of technology (medical, critical comms., aviation, automotive, command & control rooms, etc.), be built to be compatible with what's natural to us?

Speech interfaces have gained both credibility and popularity (thank you Siri) and gestural interfaces are moving on from gaming apps to other utilitarian technologies such as cars. See figure below.

Figure: Drawings from a 2013 Microsoft patent application suggest gestures that would serve, from left, as commands to lower and raise the audio volume and a request for more information. (United States Patent and Trademark Office via New York Times)

As we march into the future, be it a car, robot or a treadmill, a semiotically-laden, like-affordances heavy, buttons-galore or touch-only UI, filled with metaphors and inferential reasoning, may not be a good idea. Consider these two examples as my closing statement as to why:

How many of us can recount the experience of inadvertently changing the speed instead of the incline when running on a treadmill? On most treadmills, both these controls have like-affordances (push buttons in ascending order or up/down arrows) and/or are mirror-imaged on either side of the display. But how many of us, when running at 7 mph, can distinguish the semiotics (text / symbol) on these buttons?

Or consider the case of powering off a Toyota Prius instead of putting it in Park. (Both Power and Park are "push button" controls with like-affordances!)

Figure: Toyota Prius. Power and Park have the same affordances ("push"). When one is not paying sufficient attention, one is prone to commit the "Error of Commission": pushing one for the other.

Going forward, we may need a mixed-modal UI that presents multiple ways of interacting with technology, to accommodate what comes most naturally to the user based on his/her situation, context and current workload. This is also contingent on the levels of automation and intelligence that might be incorporated in a machine, device or appliance.

In the meantime, let's keep in mind that, as good as touch screens may get, they should not be viewed as the "Midas Touch" for user-interaction design.

In closing, every one of us must remember Bill Buxton's primary axiom for design in general and user-interfaces in particular:

"Everything is best for something and worst for something else."